Music and AI: Back to the Future

Whether it’s music and AI, or AI and what have you, or even technology altogether, at Visionary Marketing we like to look back in time. A few days ago, while tidying up some of our 3,000 articles, we rediscovered this post, written by Mia Tawile in July 2016. Eight years on the Internet count as eight dog years, to use that hackneyed motto from the early days of the Web. That is to say, 64 years and 3 months. And at a time when Suno is sending shivers down the spine of every musician on the planet wondering what will become of them, this post holds two lessons, both showing that we don’t understand the history of innovation, as Scott Berkun would have it.

“Hello dear human friends” is the introduction to this striking video by Laurent Couson, a French composer who analysed the capabilities of Suno, a popular application that lets you compose music of almost any style in 30 seconds. “Before, you had to learn music theory, orchestration and instrumentation – at the very least, ten years of practice – to become an accomplished composer,” he continues. He could have added, “Provided you’re gifted.”

900,000 Pieces of Music per Day

“This piece of software,” he went on, “generates 900,000 pieces of music a day, while no well-known composer, not even the most prolific, has 1,000 in his catalogue.” And it’s true that the results are amazing.

We went there too, and as rumours of the death of cyberspace grow louder, we decided to launch Suno on this theme with a song entitled: The Dying Cyberspace.

[Verse]

In a world of bytes and tangled wires

The cyberspace that once glowed with fire (with fire)

Now fades away, its brilliance lost

As darkness falls, at such a cost

[Verse 2]

Once a realm of endless possibility

Now echoes silence and fragility

The Internet, a dying art

Fading now, tearing us apart

[Chorus]

Oh, the dying cyberspace (cyberspace)

Once so full of life and grace (life and grace)

Now it withers, slowly dies (slowly dies)

Leaving us with empty skies (empty skies)

A very basic prompt

Admittedly, the lyrics are a bit cheesy, but considering the time spent (less than a minute), the result is more than satisfactory. All the more so as the prompt used was really minimal.

A song on the death of the cyberspace, neoclassical

The possibilities are endless with this tool: you can even invent Russian songs in post-punk mode. And if you ask DeepL to translate the lyrics, you’ll realise that they’re pretty creative. Maybe not on a par with Pushkin, but certainly well above the average of what you hear on Spotify (well, I can only surmise, because I subscribe to Qobuz).

An enamelled vessel

A window, a bedside table, a bed

It is difficult and uncomfortable to live

But it’s more comfortable to die

Эмалированное судно

Окошко, тумбочка, кровать, –

Жить тяжело и неуютно

Зато уютно умирать

(I can’t vouch for the translation from Russian into English, so I’ll take DeepL’s word for it.)

According to popular belief, music is linked to mathematics, even if this interconnection is not completely proven. As a result, it’s not totally astounding that a computer manages to do this. In fact, computers have been making music since PopCorn (1969). Note that the dancers are slightly out of sync, no doubt baffled by the technological prowess of the end of that decade.

The First Computer-Generated song

I remember well, when I was 7, the announcement of this song on the radio: the first song produced by a computer. It was extraordinary, and way ahead of its time.

But AI in music also raises a whole range of questions:

- First of all, the machine has virtually every style at its disposal. You can ask it to imitate one without having to master it and especially not by working for 10 years. This raises the question of the value of creation. How much could we pay Mr Couson to produce a song like this? We could even go further, and get Suno – or its clones – to compose a symphony or an opera. It might have to go through a few steps, but it’s a lot less tiring than inventing Einstein on the Beach or Die Zauberflöte from scratch.

- And the associated question: if there is no longer any value in creating music, how many musicians will still have a go at it?

- There also arises, and this is the third point, the question of creation itself. If it’s so easy to create music that isn’t all that bad, aren’t we in danger of going round in circles? Also, can we innovate, in content and form, if the basis is a collection of existing music data? Won’t the novelty wear off? Some would say that this is already the case to some extent, I suppose, but this will just finish the job.

- The training data for these programmes is based on the work of hundreds of thousands of musicians over hundreds of years. It is – as with Midjourney – the plunder of our cultural heritage that raises the question of the protection of intellectual property. Or rather, it might make such IP redundant, unless legal proceedings are successful (but justice is slow, and AIs are fast).

- It also raises the bar for tomorrow’s content creators who will want to show their creativity and beat the machines. This will really demand a lot of imagination.

Democratisation or the end of creation?

When everyone becomes a creator, does that mean that creation no longer exists or, on the contrary, that everyone has become a true creator, even without talent? And is pressing a button and waiting for a program to produce a result a creative act? Is “prompting” sufficient? Tomorrow, will humans become the blue-collar workers of artistic creation while machines do all the thinking?

Many questions are raised. And the undeniable fun that one can have with this type of programme should not allow us to forget about them. What’s more, it’s a guilty pleasure. If we are endowed with a conscience, it’s hard not to feel, as with tools like Midjourney, that we are faking artistry.

AI and music: a long-standing innovation

From Gershon Kingsley to Wally Badarou (who was composing on the Mac in the early 90s), Klaus Schulze (and his fabulous Ludwig Zwei von Bayern with his fully synthesised string orchestra in 1978) and Zoe Keating, who records and plays her sound loops thanks to a pedal connected to a MacBook Pro, artists’ experiments with computer music have been numerous.

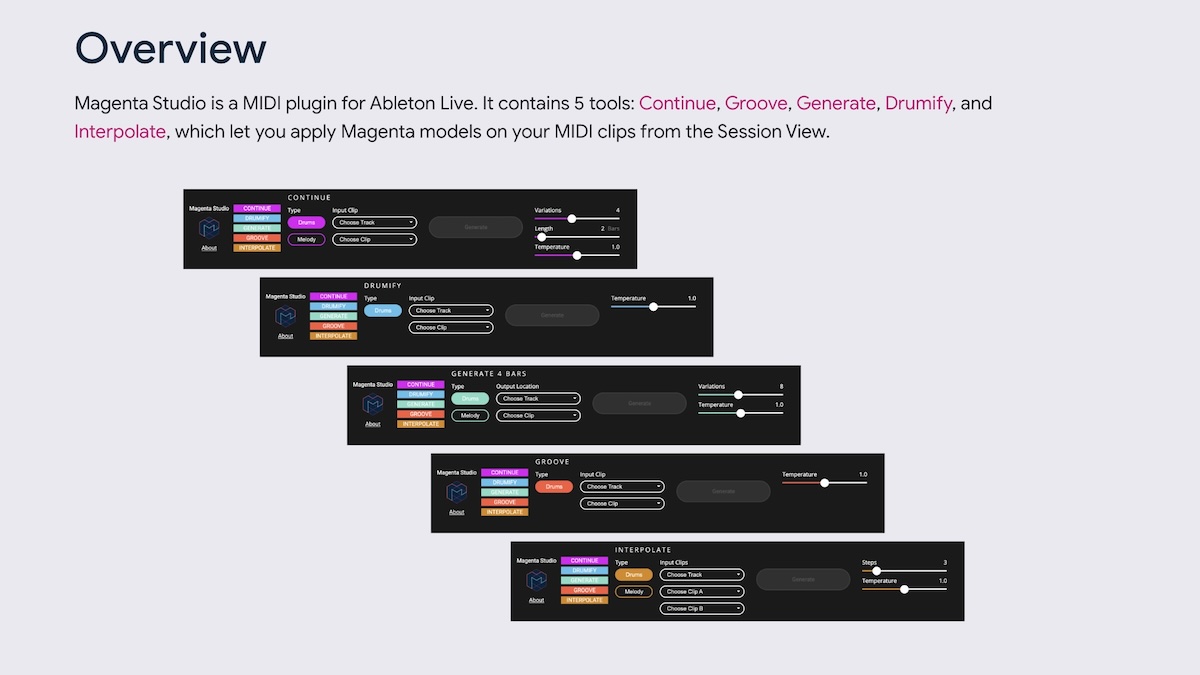

But producing music with AI goes a step further. Here too, however, the attempts are not recent. Digging around on this site, we found an old article written by Mia Tawile in 2016 about a Google project named Magenta, of which there are still a few scattered traces on the Internet.

Interesting Examples

There’s also some pretty interesting music here, provided by artificial intelligence, the fruit of Google’s early work in this area. The results are promising but without a future, like so many aborted attempts by this Internet giant, which seems so focused on its business model. So much so that it may be suffering from the innovator’s dilemma.

Example of chamber music produced by Magenta. Not quite Haydn, but it sounds a bit like it (Midi style).

My optimism leads me to believe that we will still need Mr Couson and his colleagues. If only to host concerts. Of course, in these artistic performances, it would not be surprising to find a few computers and loops invented by AI. In this respect, these artists will no doubt be the worthy heirs of the pioneers I mentioned earlier. After all, didn’t musicians like Wim Mertens and Philip Glass imitate repetitive computer music with real instruments? And more recently, haven’t Nils Frahm, Niklas Paschburg or Grandbrothers included these technologies in their music to the point that we end up forgetting about them?

Creators always find a way of circumventing issues like these.

The 2016 original post on AI and Music

Below is Mia’s post from 2016. Here are my two cents about it, with nearly 10 years of hindsight:

- Innovation takes time. And no, it won’t happen overnight (lesson number one);

- Google missed the boat (again, I daresay), even though the transformer researchers were working for Alphabet at the time (lesson number two).

Enjoy the AI Time Machine.

We have all heard of Mozart, Chopin, and Beethoven, but not all of us know Google’s artificial intelligence and its ability to generate music with AI. Yes, a robot has joined the club. And yes, it plays music. (If the song We are the robots by Kraftwerk is playing in your head right now, it is completely normal, don’t worry.) This new robot/artist that creates a lot of debate is called Magenta. You might have seen in my previous article about Facebook’s artificial intelligence how machine learning works on images and videos. This article will describe a concept that is similar yet different. The main question here is: Can you use machine learning to create a music piece? That’s exactly what I will touch on in this article.

Google’s Magenta and its music band

Magenta is Google’s Brain Team project that answers the question mentioned above: Can we use artificial intelligence and machine learning to play music?

Two goals

Google developed this project with two goals. The first is to explore machine learning even deeper and take this concept further. Indeed, this type of artificial intelligence has been used to recognise pictures and speech, and to translate content. Facebook too has a similar algorithm that has been used to help blind people hear their newsfeed. This feature is called Facebook Read.

For Artificial Intelligence researchers, the sky is the limit. They always look for new features to develop, and new ways of developing machines.

So why not create algorithms and teach machines how to play the piano, for example? Robots are good students. Indeed, blind tests have shown that people have been fooled by machines: Peter Russel, who is a musicologist, listened to a music piece played by Iamus, a classical music robot.

Surprisingly enough, he did not know it was created by a machine.

Google is inviting people who are interested in this project to join the community and follow its progress. In fact, part of the project is accessible to the general public, and is waiting for people’s input.

AI Music: Beyond Limits

A lot of people are scared of such technological growth. Well, they might be right. When machines start recognising pictures, and videos, and describe them to us, it means that technology is taking these robots beyond their limits.

The good news is that there is a use for this technology. It is not only developed to win a challenge, or defy the limits of research and technology. As I mentioned earlier, Facebook uses artificial intelligence to expand its community, without excluding anyone.

When it comes to artificial music, some applications have identified this new trend and are working around it. There’s a mobile app called @life that plays music according to your state of mind and your mood. You might ask yourself, how does a machine know what one is feeling in real time? The machine gathers information about the person’s behaviour or their location and analyses their mood. Some data analysts use Instagram filters, for example, to identify the user’s mood: dark colours reflect sadness, whereas bright colours represent happiness. This music mobile application is said to help people in pain by distracting them and using the well-known benefits and virtues of music.

Maybe machines will help us create new music genres, by combining different algorithms. Or maybe this new invention will help people manage their stress or heal their pain, music being the cure to everything! We can see the bud of that technology today with Spotify that can detect your running speed and adapt the music type and tempo.

- Music and AI: Back to the Future - 07/06/2024
